100,000,000: The race to save children behind the staggering number

Our CyberTipline at the National Center for Missing & Exploited Children has surpassed a daunting new milestone: 100 million reports of suspected child sexual exploitation, nearly all related to images and videos of children being sexually abused that are circulating on the internet, some even live streamed.

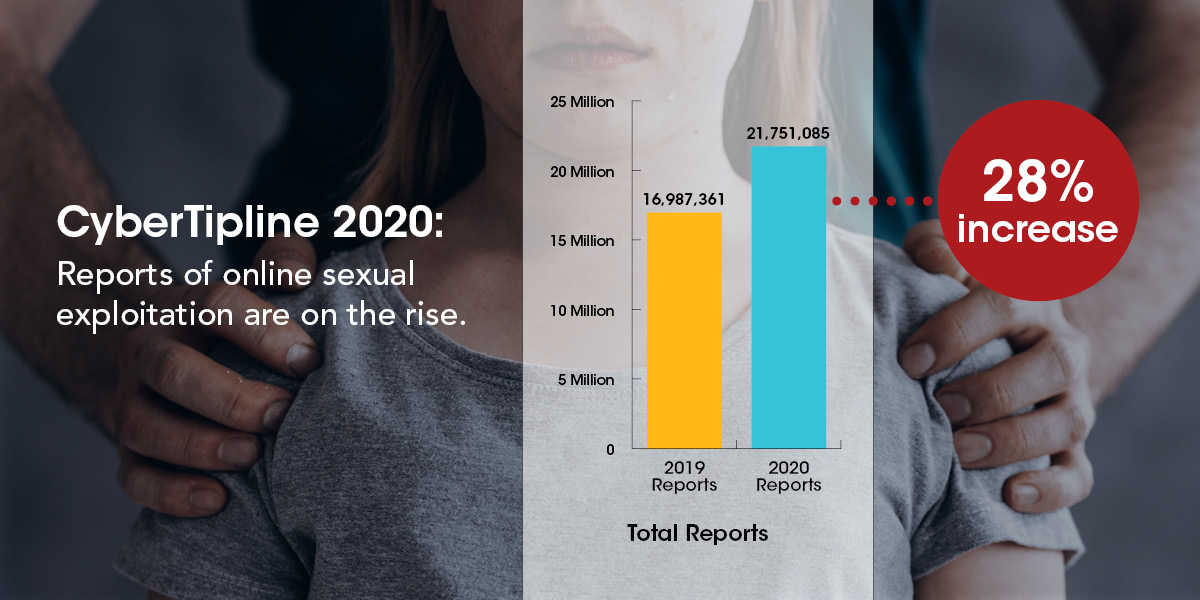

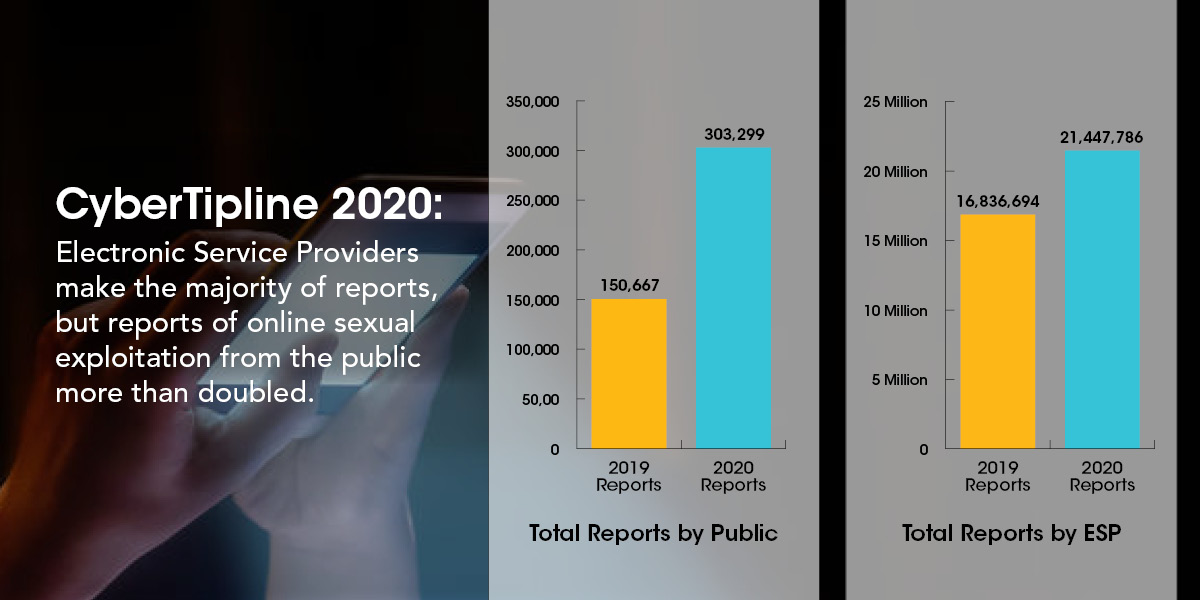

Last year alone, the CyberTipline – the mechanism in the United States for the public and electronic service providers to report these crimes – received more than 21.7 million reports. It’s a stunning indictment of the insatiable demand for this abusive imagery on the internet.

Since our CyberTipline began operating in 1998, enormous progress has been made in disrupting the distribution of these images and videos, known as child sexual abuse material (CSAM), and in prosecuting those who share imagery of children being victimized. In large part, these strides are due to technological advances that find these images online and help law enforcement identify the children around the world who are being abused.

“You can’t help but reflect when surpassing a milestone of 100 million reports relating to possible child sexual exploitation,” said John Shehan, vice president of NCMEC’s Exploited Children Division. “The countless children who’ve been recovered by law enforcement who investigated a CyberTipline report. Offenders who were identified based on a tip that prevented them from exploiting more children. I’m incredibly proud of our staff and the support we’ve received from our public and private partners.”

In addition to the 21.7 million CyberTipline reports last year, we also received requests from law enforcement to review more than 15 million images and videos. Our analysts help determine whether the children depicted in CSAM, which accounts for 99 percent of our CyberTipline reports, have been previously identified or are new victims.

“Some images we’ve seen tens of thousands of times, and we know the victim has already been recovered,” said Shehan. “It’s important to weed through all the data to identify newly produced images and videos so law enforcement can find victims who are being actively abused.”

Getting help to these children quickly is paramount, and the sheer volume of reports and millions of images present significant challenges. NCMEC analysts use a range of cutting-edge technology tools to triage CyberTipline reports and determine where the content originated, so they can make it available to the appropriate law enforcement agency for potential investigation. They continually review incoming reports to make sure children in imminent danger receive first priority.

Technology has also become critical for law enforcement to keep pace with the volume of files as they’re investigating these crimes in the field. There are many tools available to assist law enforcement, and an example of a new, innovative approach comes from Cyan Forensics (https://cyanforensics.com), a U.K.-based technology company and NCMEC partner.

Cyan’s digital triage tools were created by a former law enforcement digital forensics specialist who wanted to tackle some of the growing problems investigators face today. The tools help officers execute search warrants more quickly and effectively by determining whether known child sexual abuse images are on a suspect’s computer, phone or other devices.

“Simply put, Cyan’s digital triage tools empower frontline staff to find evidence fast, driving good decision making and helping safeguard children swiftly,” said Ian Stevenson, CEO of Cyan.

Cyan’s digital triage tool used here to scan devices in a suspect’s home.

Cyan’s technology scans a computer hard drive for known data extremely quickly and can even find deleted data. The tools, which use a Contraband Filter and statistical sampling at block level, are relatively simple for law enforcement to operate.

Detective Maurice Kwon, digital forensic supervisor with the Los Angeles Police Department, said triage tools help officers executing a search warrant identify any victims living in the home more quickly. The tools also let them rule out devices that don’t contain CSAM rather than having to “bag and tag” every laptop and phone in the home.

“We want to minimize the impact on families,” said Kwon. “We plug in the triage tool, and it immediately starts scanning for CSAM. Mom’s going to need her phone. Kids need their digital devices for school. Now we can eliminate them.”

Terrance Dobrosky, senior DA investigator with the Ventura County High Tech Task Force, said Cyan’s technology works at block level, so it can detect CSAM even if an image has been partially deleted or altered, which file hashing cannot do. His lab decided to try it.

“So far it’s actually been very, very promising,” said Dobrosky, whose team is building a Contraband Filter to use with Cyan’s tools. “Working at block level is a lot faster. You’re going to get more success, a lot more hits.”
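To make the block-level idea concrete, here is a minimal Python sketch, assuming fixed 4,096-byte blocks and SHA-256 block hashes. It is not Cyan’s implementation: the names `block_hashes`, `build_filter` and `sample_hits` are hypothetical, a plain Python set stands in for the Contraband Filter, and the data is synthetic random bytes. It shows why a one-byte edit defeats a whole-file hash while most of the file’s blocks still match, and how hashing only a random sample of disk blocks can still register hits.

```python
import hashlib
import random

BLOCK_SIZE = 4096  # assumed block size for illustration


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> set[str]:
    """SHA-256 of every full, aligned block in a known file."""
    return {
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data) - block_size + 1, block_size)
    }


def build_filter(known_files: list[bytes]) -> set[str]:
    """Hypothetical stand-in for a Contraband Filter: all known block hashes."""
    known: set[str] = set()
    for data in known_files:
        known |= block_hashes(data)
    return known


def sample_hits(disk: bytes, known: set[str], samples: int, seed: int = 0) -> int:
    """Hash a random sample of aligned disk blocks and count filter matches."""
    rng = random.Random(seed)
    n_blocks = len(disk) // BLOCK_SIZE
    hits = 0
    for idx in rng.sample(range(n_blocks), min(samples, n_blocks)):
        block = disk[idx * BLOCK_SIZE:(idx + 1) * BLOCK_SIZE]
        if hashlib.sha256(block).hexdigest() in known:
            hits += 1
    return hits


# Synthetic demo data: random bytes stand in for a known file (40 blocks).
rng = random.Random(42)
known_file = rng.randbytes(40 * BLOCK_SIZE)

# Alter one byte: the whole-file hash no longer matches...
altered = bytearray(known_file)
altered[-1] ^= 0xFF
altered = bytes(altered)
print(hashlib.sha256(known_file).hexdigest() == hashlib.sha256(altered).hexdigest())  # False

# ...but 39 of its 40 blocks are unchanged. Place the altered copy on a
# simulated 200-block "disk", starting on a block boundary (assumed).
disk = rng.randbytes(60 * BLOCK_SIZE) + altered + rng.randbytes(100 * BLOCK_SIZE)
known = build_filter([known_file])
print(sample_hits(disk, known, samples=200))  # full scan: 39 hits
print(sample_hits(disk, known, samples=50))   # 25% sample: expect roughly a quarter of them
```

Because a matching file spans many blocks, even a modest random sample is likely to land on some of them, which is one plausible reason sampling can be fast; the real tool’s block size, filter structure and sampling strategy may well differ.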

On the minds of law enforcement, tech companies, our analysts and anyone tackling this issue is the suffering of the children in these images and videos. Though it’s legally known as child pornography, we believe that CSAM more accurately describes the severity of these crimes. These are not pictures of babies in a bathtub; these are crime-scene photos.

Even after the abuse has stopped, survivors of CSAM are caught in a unique cycle of trauma. In communities around the globe, survivors live with the debilitating fear that the photos and videos memorializing the sexual abuse they suffered as children, once shared on the internet, will remain online forever for anyone to see.

Many of these children are revictimized each time their images are shared, often well into adulthood and even decades later, and they constantly worry that someone who has seen their images will recognize them wherever they go. Hear their terrified voices:

- “I try to live as invisibly as possible, try to impress upon myself that the chance of recognition is really very small since I’m much older now. But the feeling persists.”

- “I do not want to socialize; I’m scared to step out of the door.”

- “I worry about this every day. I’m afraid for my children’s safety, try to avoid going out…(I’m) really paranoid when I take my kids to places like the zoo.”

- “I try to cover my face with my hair.”

If you see something online that you believe is child sexual exploitation, you may wonder who to call. The child you see on the internet is likely being abused not where you live but in another state or even another country.

That’s why our CyberTipline, www.cybertipline.org, was established to give you a way to safely report the incident without sharing the images. Even if you’re not sure, please report to us. You could save a child from unfathomable abuse.


(We’re offering new training for professionals who support survivors experiencing this unique kind of trauma. To register, go to https://bit.ly/3p4e3I8)

NCMEC technology partners helping in search for children and their abusers:

- Videntifier: Our video-matching service, which has greatly reduced the number of files needing manual review https://www.videntifier.com/

- Google Hash Matching API and Microsoft PhotoDNA: Our image-matching technologies, which match incoming files against previously seen ones so we can focus manual efforts on brand-new, never-before-seen files https://protectingchildren.google and https://www.microsoft.com/en-us/photodna

- Griffeye: Our file-categorization tool, which allows us to categorize, prioritize and tag files with metadata https://www.griffeye.com/

- Palantir: Our data-mining and analytics partner, which helps identify urgent and high-priority cases and allows us to catalog, search and query the large volume of data in our system quickly and seamlessly https://www.palantir.com/ncmec/
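The hash-matching step described for the image-matching tools above can be sketched in Python as follows. This is a simplified stand-in, not the Google or Microsoft API: PhotoDNA and similar services use robust hashes designed to tolerate resizing and re-encoding, whereas this sketch uses exact SHA-256 digests. The function `triage` and the sample data are hypothetical. It splits incoming files into those matching previously catalogued hashes and those needing manual review, collapsing duplicate copies so each new file is reviewed once.

```python
import hashlib


def triage(files: dict[str, bytes], known_hashes: set[str]) -> tuple[list[str], dict[str, str]]:
    """Split incoming files into hash matches and unique files needing review.

    Returns (matched file names, {digest: first file name with that content}).
    """
    matched: list[str] = []
    review: dict[str, str] = {}
    for name, data in files.items():
        digest = hashlib.sha256(data).hexdigest()
        if digest in known_hashes:
            matched.append(name)             # previously catalogued content
        else:
            review.setdefault(digest, name)  # keep one copy of each new file
    return matched, review


# Hypothetical placeholder data standing in for real files.
known_hashes = {hashlib.sha256(b"catalogued-A").hexdigest()}
incoming = {
    "a.jpg": b"catalogued-A",
    "b.jpg": b"new-B",
    "c.jpg": b"new-B",  # duplicate of b.jpg
    "d.jpg": b"new-D",
}
matched, review = triage(incoming, known_hashes)
print(matched)                # ['a.jpg']
print(list(review.values()))  # ['b.jpg', 'd.jpg']
```

PhotoDNA, for example, is designed to match copies that have been resized or slightly altered, which an exact digest like SHA-256 would miss; the sketch only illustrates the overall split between known and new material.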